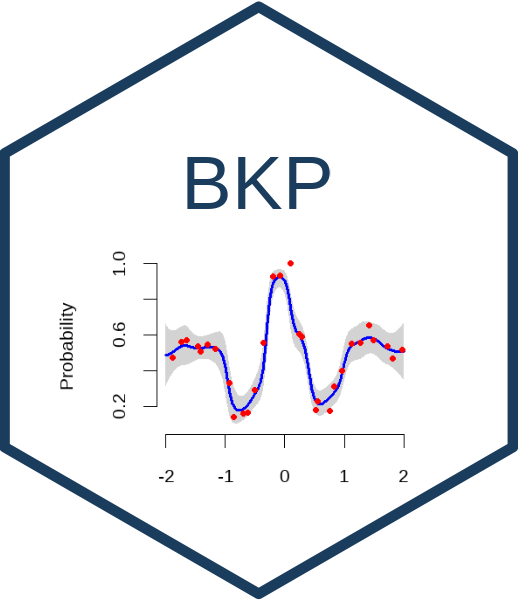

Beta Kernel Process

The Beta Kernel Process (BKP) provides a flexible and computationally efficient nonparametric framework for modeling spatially varying binomial probabilities.

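Let $\mathbf{x} = (x_1, x_2, \ldots, x_d) \in \mathcal{X} \subset \mathbb{R}^d$ denote a $d$-dimensional input, and suppose the success probability surface $\pi(\mathbf{x}) : \mathcal{X} \to [0, 1]$ is unknown. At each location $\mathbf{x}$, the observed data is modeled as

$$
y(\mathbf{x}) \sim \mathrm{Binomial}\big(m(\mathbf{x}), \pi(\mathbf{x})\big),
$$

where $y(\mathbf{x})$ is the number of successes out of $m(\mathbf{x})$ independent trials.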

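The full dataset comprises $n$ observations $\mathcal{D}_n = \{(\mathbf{x}_i, y_i, m_i)\}_{i=1}^n$, where we write $y_i = y(\mathbf{x}_i)$ and $m_i = m(\mathbf{x}_i)$ for brevity.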
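To make the observation model concrete, the following plain-Python sketch draws a synthetic dataset from it. The probability surface `true_pi` (a one-dimensional logistic curve), the design points, and the trial counts are hypothetical choices for illustration only; they are not part of the BKP method.

```python
import math
import random


def true_pi(x: float) -> float:
    """Hypothetical 1-D success probability surface (logistic curve)."""
    return 1.0 / (1.0 + math.exp(-4.0 * (x - 0.5)))


def simulate_binomial(xs, ms, seed=0):
    """Draw y_i ~ Binomial(m_i, pi(x_i)) by counting m_i Bernoulli successes."""
    rng = random.Random(seed)
    ys = []
    for x, m in zip(xs, ms):
        p = true_pi(x)
        ys.append(sum(1 for _ in range(m) if rng.random() < p))
    return ys


xs = [i / 9 for i in range(10)]  # n = 10 inputs on [0, 1]
ms = [20] * len(xs)              # m_i = 20 trials at every input
ys = simulate_binomial(xs, ms)
print(list(zip(xs, ys, ms)))     # the dataset D_n as (x_i, y_i, m_i) triples
```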

Prior

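In line with the Bayesian paradigm, we assign a Beta prior to the unknown probability function:

$$
\pi(\mathbf{x}) \sim \mathrm{Beta}\big(\alpha_0(\mathbf{x}), \beta_0(\mathbf{x})\big),
$$

where $\alpha_0(\mathbf{x}) > 0$ and $\beta_0(\mathbf{x}) > 0$ are spatially varying shape parameters.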

Posterior

Let $k : \mathcal{X} \times \mathcal{X} \to [0, 1]$ denote a user-defined kernel function measuring the similarity between input locations.
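The kernel is left to the user. As one common choice (an assumption here, not prescribed by the text), the sketch below uses a Gaussian kernel with a hypothetical length-scale parameter `theta`; its values lie in $(0, 1]$, matching the similarity interpretation. It also assembles the vector of kernel weights $\mathbf{k}(\mathbf{x})$ that enters the posterior update.

```python
import math


def gaussian_kernel(x, x_prime, theta=0.2):
    """k(x, x') = exp(-||x - x'||^2 / theta^2), with values in (0, 1].

    `theta` is a hypothetical length-scale: larger values let
    more distant inputs share information.
    """
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, x_prime))
    return math.exp(-sq_dist / theta ** 2)


def kernel_weights(x, train_xs, theta=0.2):
    """Kernel weight vector k(x) = [k(x, x_1), ..., k(x, x_n)]."""
    return [gaussian_kernel(x, xi, theta) for xi in train_xs]


train_xs = [(0.0,), (0.5,), (1.0,)]  # d = 1; inputs stored as tuples
w = kernel_weights((0.5,), train_xs)
print([round(v, 4) for v in w])  # → [0.0019, 1.0, 0.0019]
```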

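By the kernel-based Bayesian updating strategy, the BKP model defines a closed-form posterior distribution for $\pi(\mathbf{x})$ as

$$
\pi(\mathbf{x}) \mid \mathcal{D}_n \sim \mathrm{Beta}\big(\alpha_n(\mathbf{x}), \beta_n(\mathbf{x})\big),
$$

where

$$
\alpha_n(\mathbf{x}) = \alpha_0(\mathbf{x}) + \mathbf{k}^\top(\mathbf{x})\,\mathbf{y}, \qquad
\beta_n(\mathbf{x}) = \beta_0(\mathbf{x}) + \mathbf{k}^\top(\mathbf{x})\,(\mathbf{m} - \mathbf{y}),
$$

$\mathbf{y} = (y_1, \ldots, y_n)^\top$ and $\mathbf{m} = (m_1, \ldots, m_n)^\top$ collect the observed successes and trials, and $\mathbf{k}(\mathbf{x}) = \big(k(\mathbf{x}, \mathbf{x}_1), \ldots, k(\mathbf{x}, \mathbf{x}_n)\big)^\top$ is the vector of kernel weights.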
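Given the kernel weights at a query input, the update amounts to two weighted sums. The following plain-Python sketch computes $\alpha_n(\mathbf{x})$ and $\beta_n(\mathbf{x})$ at one query point; the weight values, the data, and the constant prior $\alpha_0 = \beta_0 = 1$ are hypothetical inputs chosen for illustration.

```python
def bkp_posterior_params(weights, ys, ms, alpha0=1.0, beta0=1.0):
    """Return (alpha_n, beta_n) at one query input x.

    alpha_n = alpha0 + sum_i k_i * y_i          (kernel-weighted successes)
    beta_n  = beta0  + sum_i k_i * (m_i - y_i)  (kernel-weighted failures)
    """
    alpha_n = alpha0 + sum(k * y for k, y in zip(weights, ys))
    beta_n = beta0 + sum(k * (m - y) for k, y, m in zip(weights, ys, ms))
    return alpha_n, beta_n


weights = [1.0, 0.5, 0.0]  # hypothetical k(x, x_i) values
ys = [8, 3, 10]            # successes y_i
ms = [10, 10, 10]          # trials m_i
print(bkp_posterior_params(weights, ys, ms))  # → (10.5, 6.5)
```

An observation with zero kernel weight (the third one here) leaves the posterior unchanged, and if all weights are zero the posterior falls back to the prior.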

Posterior summaries

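Based on the posterior distribution above, the posterior mean is

$$
\hat{\pi}_n(\mathbf{x}) = \mathbb{E}\big[\pi(\mathbf{x}) \mid \mathcal{D}_n\big] = \frac{\alpha_n(\mathbf{x})}{\alpha_n(\mathbf{x}) + \beta_n(\mathbf{x})},
$$

which serves as a smooth estimator of the latent success probability.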

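The corresponding posterior variance is

$$
\mathrm{Var}\big[\pi(\mathbf{x}) \mid \mathcal{D}_n\big] = \frac{\alpha_n(\mathbf{x})\,\beta_n(\mathbf{x})}{\big(\alpha_n(\mathbf{x}) + \beta_n(\mathbf{x})\big)^2 \big(\alpha_n(\mathbf{x}) + \beta_n(\mathbf{x}) + 1\big)},
$$

which provides a local measure of epistemic uncertainty.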
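Both summaries follow directly from the moments of the Beta distribution. The sketch below (plain Python, with hypothetical parameter values) computes them and shows that, for the same mean, a smaller total $\alpha_n + \beta_n$, that is, less kernel-weighted data, yields a larger variance.

```python
def beta_posterior_summary(alpha_n, beta_n):
    """Posterior mean and variance of Beta(alpha_n, beta_n).

    The variance alpha*beta / ((alpha+beta)^2 (alpha+beta+1)) is computed
    in the equivalent form mean * (1 - mean) / (alpha + beta + 1).
    """
    total = alpha_n + beta_n
    mean = alpha_n / total
    var = mean * (1.0 - mean) / (total + 1.0)
    return mean, var


print(beta_posterior_summary(3.0, 1.0))  # → (0.75, 0.0375)
print(beta_posterior_summary(1.5, 0.5))  # → (0.75, 0.0625): same mean, less data
```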

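These posterior summaries can be used to visualize predictions and their uncertainty across the input space. Regions with sparse data coverage receive little total kernel weight, so the posterior there stays close to the prior and the variance is typically larger than in well-sampled regions.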

Binary classification

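For binary classification, the posterior mean $\hat{\pi}_n(\mathbf{x})$ can be thresholded to produce hard predictions:

$$
\hat{y}(\mathbf{x}) = \mathbb{1}\big\{\hat{\pi}_n(\mathbf{x}) > \pi_0\big\},
$$

where $\pi_0 \in (0, 1)$ is a user-specified threshold, typically set to $0.5$.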
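A minimal sketch of the thresholding rule in plain Python. It assumes a strict inequality, so a posterior mean exactly equal to the threshold is labeled 0; this tie-breaking convention is an assumption of the sketch.

```python
def classify(post_means, threshold=0.5):
    """Hard labels: 1 if the posterior mean exceeds the threshold, else 0.

    Uses a strict '>' (assumed tie-breaking: ties go to class 0).
    """
    return [1 if p > threshold else 0 for p in post_means]


print(classify([0.2, 0.5, 0.75]))       # → [0, 0, 1]
print(classify([0.2, 0.5, 0.75], 0.3))  # → [0, 1, 1]
```

Lowering the threshold trades false negatives for false positives, which can be useful when the two error types have unequal costs.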

Dirichlet Kernel Process

The Dirichlet Kernel Process (DKP) naturally extends the BKP framework to multi-class responses by replacing the binomial likelihood with a multinomial model and the Beta prior with a Dirichlet prior.

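Let the response at input $\mathbf{x}$ over $q$ classes be

$$
\mathbf{y}(\mathbf{x}) = \big(y_1(\mathbf{x}), \ldots, y_q(\mathbf{x})\big),
$$

where $y_s(\mathbf{x})$ denotes the count of class $s$ out of $m(\mathbf{x}) = \sum_{s=1}^{q} y_s(\mathbf{x})$ total trials. Assume $\mathbf{y}(\mathbf{x}) \sim \mathrm{Multinomial}\big(m(\mathbf{x}), \boldsymbol{\pi}(\mathbf{x})\big)$ with class probabilities

$$
\boldsymbol{\pi}(\mathbf{x}) = \big(\pi_1(\mathbf{x}), \ldots, \pi_q(\mathbf{x})\big), \qquad \sum_{s=1}^{q} \pi_s(\mathbf{x}) = 1.
$$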
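As with the binomial case, the multinomial model can be made concrete by simulation. The plain-Python sketch below draws one count vector by sampling $m$ class labels; the class probabilities and the number of trials are hypothetical values for illustration.

```python
import random
from collections import Counter


def simulate_multinomial(m, probs, seed=0):
    """Draw one count vector y(x) ~ Multinomial(m, probs) by sampling m labels."""
    rng = random.Random(seed)
    labels = rng.choices(range(len(probs)), weights=probs, k=m)
    counts = Counter(labels)
    return [counts[s] for s in range(len(probs))]  # Counter returns 0 if absent


y = simulate_multinomial(m=30, probs=[0.2, 0.5, 0.3])  # hypothetical q = 3 classes
print(y, sum(y))  # the counts always sum to m = 30
```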

Prior

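A Dirichlet prior is placed on $\boldsymbol{\pi}(\mathbf{x})$:

$$
\boldsymbol{\pi}(\mathbf{x}) \sim \mathrm{Dirichlet}\big(\boldsymbol{\alpha}_0(\mathbf{x})\big),
$$

where $\boldsymbol{\alpha}_0(\mathbf{x}) = \big(\alpha_{0,1}(\mathbf{x}), \ldots, \alpha_{0,q}(\mathbf{x})\big)$, with positive entries, are prior concentration parameters.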
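As a consistency check (a standard property of the Dirichlet distribution rather than a claim made in the text), the two-class case $q = 2$ reduces to the BKP prior:

$$
\big(\pi_1(\mathbf{x}), \pi_2(\mathbf{x})\big) \sim \mathrm{Dirichlet}\big(\alpha_{0,1}(\mathbf{x}), \alpha_{0,2}(\mathbf{x})\big)
\iff
\pi_1(\mathbf{x}) \sim \mathrm{Beta}\big(\alpha_{0,1}(\mathbf{x}), \alpha_{0,2}(\mathbf{x})\big), \quad \pi_2(\mathbf{x}) = 1 - \pi_1(\mathbf{x}),
$$

so identifying $\alpha_0(\mathbf{x}) = \alpha_{0,1}(\mathbf{x})$ and $\beta_0(\mathbf{x}) = \alpha_{0,2}(\mathbf{x})$ recovers the Beta prior of the BKP model.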

Posterior

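Given training data $\mathcal{D}_n = \{(\mathbf{x}_i, \mathbf{y}_i)\}_{i=1}^n$ with $\mathbf{y}_i = \mathbf{y}(\mathbf{x}_i)$, define the response matrix as

$$
\mathbf{Y} = [\mathbf{y}_1, \ldots, \mathbf{y}_n]^\top \in \mathbb{R}^{n \times q}.
$$

The kernel-smoothed conjugate posterior distribution becomes

$$
\boldsymbol{\pi}(\mathbf{x}) \mid \mathcal{D}_n \sim \mathrm{Dirichlet}\big(\boldsymbol{\alpha}_n(\mathbf{x})\big), \qquad
\boldsymbol{\alpha}_n(\mathbf{x}) = \boldsymbol{\alpha}_0(\mathbf{x}) + \mathbf{k}^\top(\mathbf{x})\,\mathbf{Y},
$$

where $\mathbf{k}(\mathbf{x})$ is the same vector of kernel weights as in the BKP posterior.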
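The DKP update is one kernel-weighted sum per class. The plain-Python sketch below computes $\boldsymbol{\alpha}_n(\mathbf{x})$ at a single query input; the weights, counts, and flat prior $\boldsymbol{\alpha}_0 = (1, 1, 1)$ are hypothetical inputs for illustration.

```python
def dkp_posterior_params(weights, Y, alpha0):
    """alpha_n(x) = alpha0(x) + k(x)^T Y for one query input x.

    weights: kernel weights [k(x, x_1), ..., k(x, x_n)]
    Y:       n rows of class counts, each of length q
    alpha0:  prior concentration vector of length q
    """
    q = len(alpha0)
    return [
        alpha0[s] + sum(k * row[s] for k, row in zip(weights, Y))
        for s in range(q)
    ]


weights = [1.0, 0.5]        # hypothetical kernel weights
Y = [[2, 5, 3], [4, 4, 2]]  # counts for q = 3 classes at two inputs
print(dkp_posterior_params(weights, Y, [1.0, 1.0, 1.0]))  # → [5.0, 8.0, 5.0]
```

With $q = 2$ and rows $(y_i, m_i - y_i)$, the two entries coincide with $\alpha_n(\mathbf{x})$ and $\beta_n(\mathbf{x})$ from the BKP posterior.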

Posterior mean

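The posterior mean

$$
\hat{\pi}_{n,s}(\mathbf{x}) = \mathbb{E}\big[\pi_s(\mathbf{x}) \mid \mathcal{D}_n\big] = \frac{\alpha_{n,s}(\mathbf{x})}{\sum_{s'=1}^{q} \alpha_{n,s'}(\mathbf{x})}, \qquad s = 1, \ldots, q,
$$

where $\alpha_{n,s}(\mathbf{x})$ is the $s$-th entry of $\boldsymbol{\alpha}_n(\mathbf{x})$, provides a smooth estimate of the class probabilities.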
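The posterior mean simply normalizes the concentration vector. A minimal plain-Python sketch, with hypothetical input values:

```python
def dkp_posterior_mean(alpha_n):
    """Posterior mean of Dirichlet(alpha_n): alpha_n[s] / sum(alpha_n)."""
    total = sum(alpha_n)
    return [a / total for a in alpha_n]


print(dkp_posterior_mean([2.0, 5.0, 3.0]))  # → [0.2, 0.5, 0.3]
```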

Categorical classification

For classification tasks, labels are assigned by the maximum a posteriori (MAP) decision rule:

$$
\hat{y}(\mathbf{x}) = \arg\max_{s \in \{1, \ldots, q\}} \hat{\pi}_{n,s}(\mathbf{x}).
$$
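The decision rule is an argmax over the posterior class means. In this plain-Python sketch, classes are indexed from 0 (add 1 to match the labels $1, \ldots, q$), and ties are broken toward the smallest index, which is an assumed convention.

```python
def map_label(post_mean):
    """0-based index s maximizing the posterior mean.

    Python's max returns the first maximal element, so ties go to the
    smallest class index (an assumed tie-breaking convention).
    """
    return max(range(len(post_mean)), key=lambda s: post_mean[s])


print(map_label([0.2, 0.5, 0.3]))  # → 1
```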